Retrieving answers from a batch of questions

You've created a bulk question job; now it's time to gather all of the results the model generated!

So, you've gathered your documents, asked a bulk question, and now you want to see the answers the model predicted for each document. Easy enough! Our Answers API gives you a flexible way to find these answers and generate reports based on the parameters you are interested in viewing.

Below, we'll walk through how to retrieve the answers generated from a demo batch built in Curate.

Part 1. Set Up

Import Dependencies

To start, let's import our dependencies.

# add dependencies

import layar_api

from layar_api.rest import ApiException

import requests

from pprint import pprint

Configure Authentication

Next, we'll want to configure our session with our authentication keys. Copy the following commands and only swap out the strings for base_host, client_id, and client_secret. The base_host is the Layar instance you're working within (e.g. 'demo.vyasa.com'), and the client ID and secret are your provided authentication keys.

To learn how to get your authentication keys, please reference this document.

# set up your authentication credentials

base_host = 'BASE_URL' # your Layar instance (e.g. 'demo.vyasa.com')

client_id = 'AbcDEfghI3' # example developer API key

client_secret = '1ab23c4De6fGh7Ijkl8mNoPq9' #example developer API secret

# configure oauth access token for authorization

configuration = layar_api.Configuration()

configuration.host = f"https://{base_host}"

configuration.access_token = configuration.fetch_access_token(

client_id, client_secret)

# Make your life easier for the next task: instantiating APIs!

client = layar_api.ApiClient(configuration)

Instantiate Your APIs

Next, we'll want to instantiate the APIs we are going to call in future commands. We'll be using three API classes for this tutorial: the QuestionApi, the SourceDocumentApi, and the AnswerApi.

# Instantiate APIs

sourceDocApi = layar_api.SourceDocumentApi(client)

questionApi = layar_api.QuestionApi(client)

answerApi = layar_api.AnswerApi(client)

Identify Your Data Sources

Finally, make a list of all the data providers (Layar instances) that documents, questions, or answers may be coming from. In this tutorial, we are using a Curate batch that was generated on 100 clinical trial documents from master-clinicaltrials.vyasa.com and PDFs from our internal Layar system ('demo.vyasa.com'). Using base_host in the string, as you see below, picks up the base_host value that you provided earlier.

# Indicate the data sources where documents are coming from (I've added all, but it can just be your base_host)

data_sources = f"{base_host}, master-clinicaltrials.vyasa.com"
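If you end up juggling more than two hosts, it can help to build that string from a list rather than typing it by hand. Here is a minimal sketch of one way to do it. The helper name build_data_sources is our own illustration (it is not part of layar_api), and the host values are placeholders.

```python
def build_data_sources(*hosts):
    """Join host names into one comma-separated string, skipping blanks and repeats."""
    unique_hosts = []
    for host in hosts:
        host = host.strip()
        if host and host not in unique_hosts:
            unique_hosts.append(host)
    return ", ".join(unique_hosts)


base_host = "demo.vyasa.com"  # placeholder: use your own Layar instance
data_sources = build_data_sources(
    base_host,
    "master-clinicaltrials.vyasa.com",
    base_host,  # a repeated host is dropped
)
print(data_sources)
```

The result has the same shape as the hand-written data_sources string above, so it can be passed along in exactly the same way.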

Set Up Complete

Awesome! You've got your ducks in a row. Now, we're going to gather the answers for your Curate batch.

Part 2. Retrieve Answers

Gather All Question Keys for your Batch

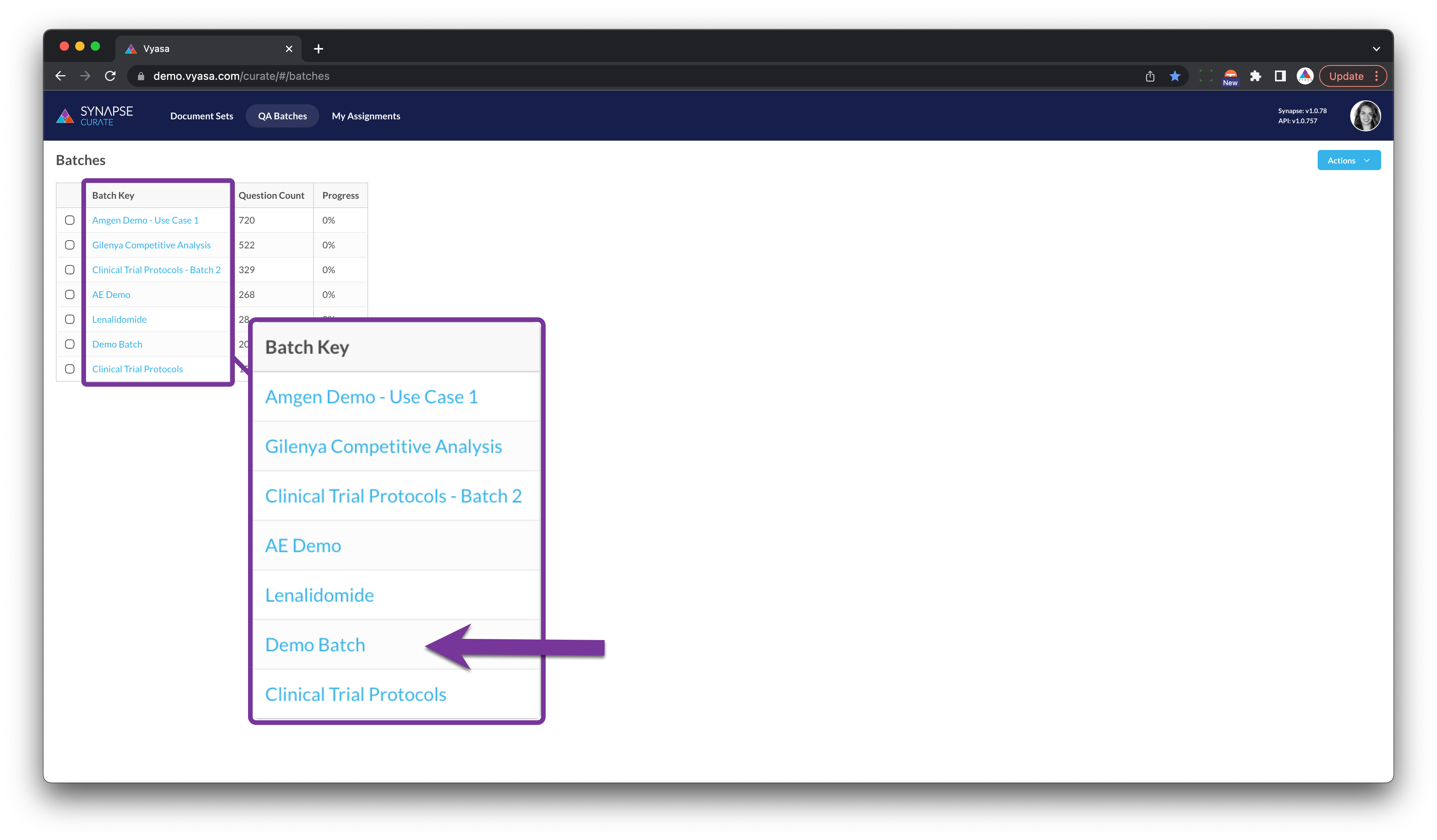

To start, you're going to want to know two pieces of information: the batch_key and the question_keys for each question you have in your batch. The batch_key can be found by heading over to the Curate tool and looking for the column 'Batch Key' (see the image above). Each row in your QA Batches main table is a batch.

We'll be using 'Demo Batch' as our example batch to retrieve answers from.

You can plug this in as your batch_key (see the code snippet below).

# Input your batch key to retrieve information from

batch_key = "Demo Batch" # Input the Curate batch key name.

batch_keys = ["Demo Batch", "Lenalidomide"] # Use this parameter instead if querying multiple batches

Getting Answers from Multiple Batches?

If you're trying to get answers from many batches (e.g. from both 'Demo Batch' and 'Lenalidomide' in the image above), you can create a batch_keys list instead. We've provided both methods above for you.

Gather the Question Keys for the Provided Curate Batch(es)

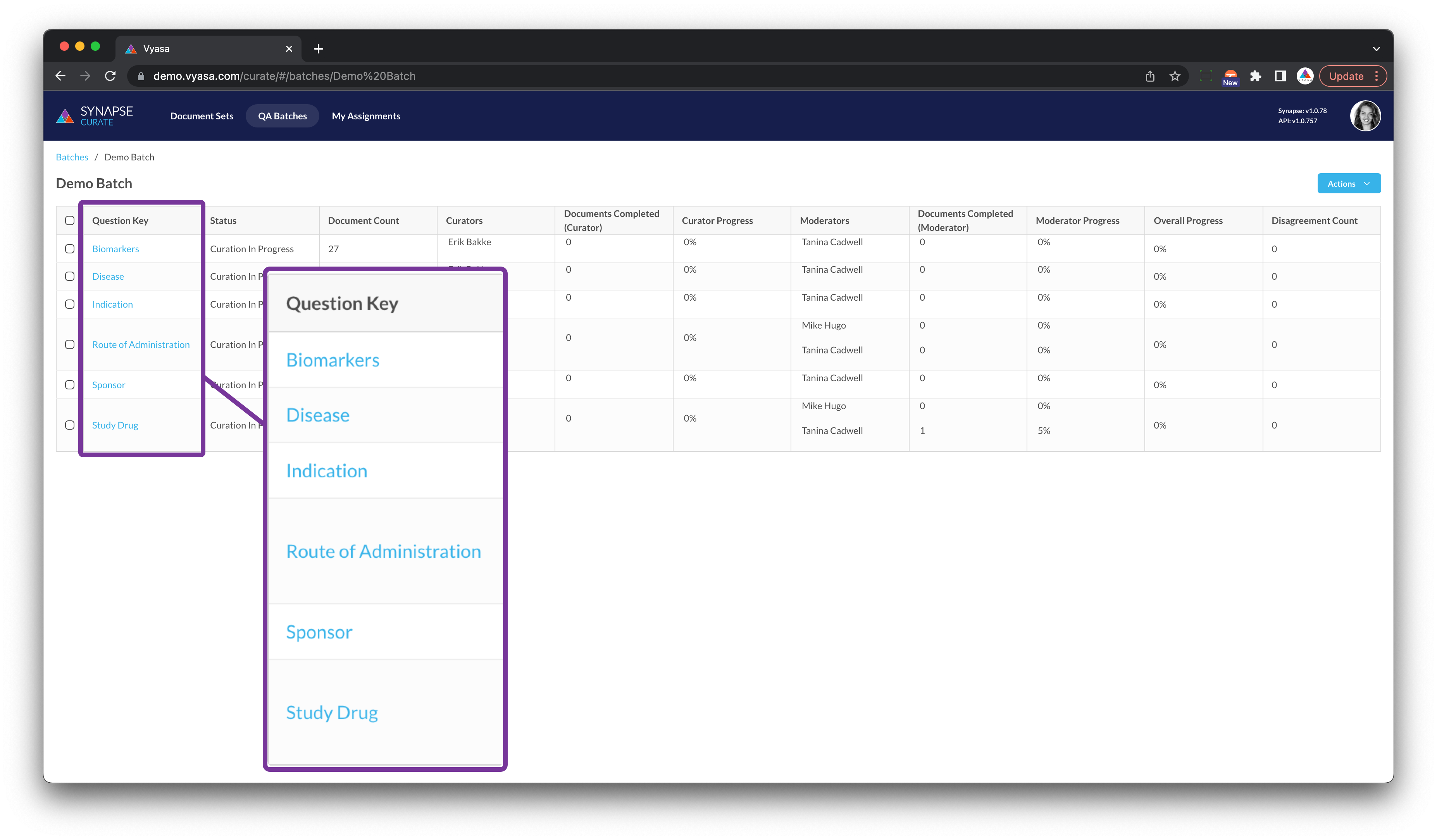

Now that you have identified your batch(es), you can get all of the question keys. Question keys are the sub-batches you see when you click into a batch; each one designates a specific question that was asked of those documents.

For example, when I click into the 'Demo Batch', I can see that six questions have already been asked in that batch, so there are six question keys available ('Biomarkers', 'Disease', 'Indication', etc.).

You can find these question keys programmatically as well. We have it available as a recipe, but we've also outlined the steps pertaining to this tutorial below:

# Remember the batch key from before?
batch_key = 'Demo Batch' # Input the Curate batch key name.

# Get the Question Keys for a bulk question batch
body = layar_api.QuestionSearchCommand(
    batch_grouping_key=batch_key,
    rows=50 # Input how many question keys you want to pull (optional).
)

try:
    question_count_response = questionApi.get_question_field_counts(body, 'questionKey')
    question_keys = list(map(lambda q: q.key, question_count_response))
    for key in question_keys:
        print(f"question key: {key}")
except ApiException as e:
    print("Exception when calling QuestionApi->get_question_field_counts: %s\n" % e)

# Querying multiple batches? Remember the batch keys from before?
batch_keys = ['Demo Batch', 'Lenalidomide'] # Note we've changed this parameter into a list

# Get the Question Keys for the bulk question batches
body = layar_api.QuestionSearchCommand(
    batch_grouping_keys=batch_keys, # Note the plural parameter option!
    rows=50 # Input how many question keys you want to pull (optional).
)

try:
    question_count_response = questionApi.get_question_field_counts(body, 'questionKey')
    question_keys = list(map(lambda q: q.key, question_count_response))
    for key in question_keys:
        print(f"question key: {key}")
except ApiException as e:
    print("Exception when calling QuestionApi->get_question_field_counts: %s\n" % e)

You should see a response in your terminal similar to what you see below.

question key: Biomarkers

question key: Disease

question key: Indication

question key: Route of Administration

question key: Sponsor

question key: Study Drug

Success!

You now have your batch key and your question keys! Now it's time to gather all the questions that were asked under each question key.

Gather all Questions Under Each Question Key

For starters, you'll want to set a few limiters and default parameters.

- The offset parameter lets the API know what document of this question key's batch you wish to start on (if it's the first document of the batch, set it to offset = 0).

- The batch_size parameter returns up to that many results per host (our data_sources parameter configured above). If you have a question key that asked questions across multiple data sources, a batch_size of 50 will go into your question key batch and gather up to 50 documents from your base_host instance, then up to 50 from the clinical trials instance, and so on.

Pro Tip: Batch size is a handy tool for building an even training dataset or model performance report, where you want to have an even number of results across each of the data sources you interrogated.

# Define batch result parameters

offset = 0 # What document of the batch you want to start on (if the first document of the batch, offset = 0) -> important for pagination

batch_size = 50 #API will return up to this number, **per host**
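To make the paging idea concrete before we hit the real API, here is a small sketch of how offset and batch_size work together. Everything in it is a stand-in: fetch_page is a made-up function that slices a fake list of 120 document names, not a Layar call, and it ignores the per-host behavior described above (the real API may return up to batch_size results for each host).

```python
def fetch_page(start, rows):
    """Stand-in for a search call: return up to `rows` items beginning at `start`."""
    fake_documents = [f"doc-{i}" for i in range(120)]  # made-up data
    return fake_documents[start:start + rows]


def iter_documents(batch_size=50):
    """Yield every document, one page at a time, until an empty page comes back."""
    offset = 0
    while True:
        page = fetch_page(offset, batch_size)
        if not page:
            break  # an empty page means we have walked past the last document
        yield from page
        offset += batch_size  # move the window to the next page


docs = list(iter_documents(batch_size=50))
print(len(docs), docs[0], docs[-1])
```

The loop requests pages starting at offsets 0, 50, 100, and 150; the last request comes back empty, which is the signal to stop. The big query in the next section follows the same pattern.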

Submit the Big Query

Next we're going to build and execute the query that gathers all of the documents for the set you used to ask your question (see "Submitting a bulk question job").

There are a lot of parameters and calls being made here, so we'll talk about one section at a time. But for starters, here's the long block of code to make the request:

while True:
    # Build and execute the query for the documents in the saved list
    body = layar_api.SourceDocumentSearchCommand(
        saved_list_ids=['AYAJ2QNF9inKGTZtewDd'],
        start=offset,
        rows=batch_size,
        sort='datePublished',
        sort_order='desc',
        source_fields=['name', 'id', 'documentURI'])
    x_vyasa_data_providers = data_sources
    documents = sourceDocApi.search_documents(body, x_vyasa_data_providers)

    # Loop over the docs, writing the answers as we go
    for document in documents:
        row_details = [
            document.id,
            document.name,
            document.document_uri
        ]

        # Go get all answers for this document + batch
        body = layar_api.AnswerSearchCommand(
            batch_grouping_key=batch_key,
            single_doc_question_document_id=document.id,
            with_concepts=False,
            rows=200
        )
        all_answers_for_doc = answerApi.search_answer(body=body)
        total_answers = int(answerApi.api_client.last_response.getheader('x-total-count'))
        if total_answers > 200:
            print(f"Unexpectedly found a lot of answers to {len(question_keys)} questions for doc {document.id}")

        # Get the answers per question key
        for key in question_keys:
            answers_for_q_key = [a for a in all_answers_for_doc if a.question_key == key]
            if answers_for_q_key:
                # Get the top 3 answers
                answers_for_q_key.sort(reverse=True, key=lambda a: max(a.evidence, key=lambda e: e.probability).probability)
                answers_for_q_key = answers_for_q_key[:3]
            else:
                answers_for_q_key = []
            row_details.append(', '.join(map(lambda a: f"{a.text} ({a.evidence[0].probability})", answers_for_q_key[:3])))

        # Print Results
        print(row_details)

    # Start Next Document (Loop)
    offset = offset + batch_size
    if len(documents) == 0:
        break

Breakdown of Above Query

Let's make this easier to follow. For each document in that question key, here's what's happening:

- First, we are using the search_documents method of the SourceDocumentApi to find all of the documents that meet the body search criteria and data sources.

- The body of the search_documents method is the SourceDocumentSearchCommand, which has an enormous variety of optional parameters you can use as search criteria.

- The x_vyasa_data_providers is the same thing as the data_sources parameter we configured earlier, so we'll be referencing that variable here.

# SourceDocumentSearchCommand Parameters

body = layar_api.SourceDocumentSearchCommand(

saved_list_ids=['AYAJ2QNF9inKGTZtewDd'],

start=offset,

rows=batch_size,

sort='datePublished',

sort_order='desc',

source_fields=['name', 'id', 'documentURI'])

# Data Sources

x_vyasa_data_providers = data_sources

# API Call to search_documents endpoint

documents = sourceDocApi.search_documents(body, x_vyasa_data_providers)

- Next, we are creating a list, row_details, that will hold each document's Layar ID, document name, and Layar URL. You can add as many document parameters as you're interested in -- the full list can be found here.

Because we're doing this in one big loop, we are looking at one document at a time, starting with offset = 0 (the first document).

# Loop over the docs, writing the answers as we go
for document in documents:
    row_details = [
        document.id,
        document.name,
        document.document_uri
    ]

- Using the search_answer endpoint for the AnswerApi, we're going to get all of the answers for this document, in the context of a single batch.

- You can use the AnswerSearchCommand parameters to specify criteria that an answer must meet in order to be returned as a result

- If you have multiple batches, you will need to create a body for each batch you're looking at. Reminder: batches are not question keys -- they are one level higher. We'll organize question keys in the next step.

# Go get all answers for this document + batch
body = layar_api.AnswerSearchCommand(
    batch_grouping_key=batch_key,
    single_doc_question_document_id=document.id,
    with_concepts=False,
    rows=200
)
all_answers_for_doc = answerApi.search_answer(body=body)
total_answers = int(answerApi.api_client.last_response.getheader('x-total-count'))
if total_answers > 200:
    print(f"Unexpectedly found a lot of answers to {len(question_keys)} questions for doc {document.id}")

- Now for question keys! In this step, we're sorting the answers for this document in the specific context of the specified question_keys.

For each question_key identified earlier, you are:

- (1) First, looking to see if an answer exists for that document in that question key.

- (2) If there are answers, sorting the answers based on their probability score. If there are no answers, nothing gets added to the answers_for_q_key list.

- (3) Of those answers, pulling the Top 3 answers from the list. Note: if you want all answers, you can remove the number 3 ([:]); if you want more or fewer, you can change the number accordingly (Top 2 = [:2], Top 10 = [:10], etc.).

- (4) Finally, appending those pulled answers to your row_details for that specific document, in that specific question key.

# Get the answers per question key
for key in question_keys:
    answers_for_q_key = [a for a in all_answers_for_doc if a.question_key == key]
    if answers_for_q_key:
        # Get the top 3 answers
        answers_for_q_key.sort(reverse=True, key=lambda a: max(a.evidence, key=lambda e: e.probability).probability)
        answers_for_q_key = answers_for_q_key[:3] # Change if you want more/less answers
    else:
        answers_for_q_key = []
    row_details.append(', '.join(map(lambda a: f"{a.text} ({a.evidence[0].probability})", answers_for_q_key[:3])))
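If you'd like to try the filter, sort, and slice logic without calling the API, here is a self-contained sketch. The Answer and Evidence classes below are simplified stand-ins we made up for illustration; they only mirror the three attributes the tutorial code reads (text, question_key, and evidence[].probability), and the sample values are invented. One deliberate difference: this sketch prints each answer's highest evidence probability (the same value it sorts by), whereas the snippet above prints the probability of the first evidence item.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Evidence:
    probability: float


@dataclass
class Answer:
    text: str
    question_key: str
    evidence: List[Evidence] = field(default_factory=list)


def best_probability(answer):
    """Highest probability across an answer's evidence (0.0 if it has none)."""
    return max((e.probability for e in answer.evidence), default=0.0)


def top_answers(answers, question_key, n=3):
    """Keep the answers for one question key, best first, limited to n."""
    matching = [a for a in answers if a.question_key == question_key]
    matching.sort(key=best_probability, reverse=True)
    return matching[:n]


def format_cell(answers):
    """Render answers as 'text (probability)' pairs for one report cell."""
    return ", ".join(f"{a.text} ({best_probability(a):.4f})" for a in answers)


sample_answers = [
    Answer("placebo", "Study Drug", [Evidence(0.68)]),
    Answer("raloxifene", "Study Drug", [Evidence(0.41), Evidence(0.98)]),
    Answer("osteoporosis", "Disease", [Evidence(0.90)]),
]
cell = format_cell(top_answers(sample_answers, "Study Drug"))
print(cell)  # raloxifene (0.9800), placebo (0.6800)
```

A question key with no answers simply produces an empty string, which keeps the columns of the report aligned.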

- Finally, we print the row_details for that document. Once every document on the current page has been handled, we advance the offset by the batch_size specified above to request the next page, and we stop when a page comes back with no documents.

        # Print Results
        print(row_details)

    # Start Next Document (Loop)
    offset = offset + batch_size
    if len(documents) == 0:
        break

Results

This is what your results will look like, if you used the parameters displayed above:

['trials_NCT02753283', 'Sustaining Skeletal Health in Frail Elderly', 'https://clinicaltrials.gov/ct2/show/study/NCT02753283', 'raloxifene (0.9819506033673466), placebo (0.6789128735623943), fracture reduction trial (0.4484393797528467)']

Each row contains the document's Layar ID, document name, and Layar URL, followed by the list of answers and their respective probabilities (e.g. 'raloxifene (0.98195)').

Success!

Now you have a list of responses with the answers generated as part of that batch. Fantastic!

Part 3. Export Results to a CSV (Optional)

Instead of retrieving your results as a response in the console, you can write your results to a CSV instead. You can use any CSV library for this, but we've used the standard python csv package below.

Heads Up

To save you from looking at too many redundant lines of code, we've shortened the script down to the specific blocks in the operation that will be added or modified. We'll be referencing the above large script to help guide you as to where these new lines of code are being added.

At the end, it will all be put together into one final script, which you can reference and modify to your needs!

Import CSV Package

In a new line within your dependencies block, import the python csv package.

import csv

Make Query an Operation (Optional)

Next, since the query iterates through every document and answer in the same run, we'll want to wrap the entire query in a function (an operation) to make it easier to call this script in the future.

Below, we've only provided the guideline comments, to help you reference the larger query above and to show you how the formatting works when wrapped within a function definition. You will still need the full code from above.

def answerReport():
    ## Section 1: Set Up
    # Instantiate APIs
    # Indicate the data sources where documents are coming from
    # Input your batch key to retrieve information from

    ## Section 2: Retrieve Results
    # Get the Question Keys for a bulk question batch
    # Define batch result parameters
    # Build and execute the query for the documents in the saved list
        # Loop over the docs, writing the answers as we go
            # Go get all answers for this document + batch
            # Get the answers per question key
                # Get the top 3 answers
            # Print Results
        # Start Next Document (Loop)

Create CSV File

After instantiating your APIs, defining your data sources, and defining which batch key you are using, you're going to create a CSV file to write the API responses into.

Let's call the CSV file resultsReport.

Since you want to write the responses for everything below this script (the question keys, the source document details, the answers, etc.), the CSV writer must remain open during those lines of the script. To do this, you 'wrap' your question & answer queries into the CSV writing command, and everything gets indented accordingly.

def answerReport():
    ## Section 1: Set Up
    # Instantiate APIs
    # Indicate the data sources where documents are coming from
    # Input your batch key to retrieve information from

    try:
        with open('resultsReport.csv', 'w', newline='') as myfile:
            wr = csv.writer(myfile, quoting=csv.QUOTE_ALL, delimiter=';')

            ## Section 2: Retrieve Results
            # Get the Question Keys for a bulk question batch
            # Define batch result parameters
            # Build and execute the query for the documents in the saved list
                # Loop over the docs, writing the answers as we go
                    # Go get all answers for this document + batch
                    # Get the answers per question key
                        # Get the top 3 answers
                    # Print Results
                # Start Next Document (Loop)
    except ApiException as e:
        print("Exception when calling SourceDocumentApi->search: %s\n" % e)

Identify Table Headers

Next, you'll want to specify what headers you want in your CSV. After gathering your question_keys and just before defining your offset and batch_size parameters, you'll write your CSV headers.

def answerReport():
    ## Section 1: Set Up
    # Instantiate APIs
    # Indicate the data sources where documents are coming from
    # Input your batch key to retrieve information from

    try:
        with open('resultsReport.csv', 'w', newline='') as myfile:
            wr = csv.writer(myfile, quoting=csv.QUOTE_ALL, delimiter=';')

            ## Section 2: Retrieve Results
            # Get the Question Keys for a bulk question batch

            # Write the header row for the spreadsheet
            wr.writerow(['Layar ID', 'Document Title', 'Layar URL'] + question_keys)

            # Define batch result parameters
            # Build and execute the query for the documents in the saved list
                # Loop over the docs, writing the answers as we go
                    # Go get all answers for this document + batch
                    # Get the answers per question key
                        # Get the top 3 answers
                    # Print Results
                # Start Next Document (Loop)
    except ApiException as e:
        print("Exception when calling SourceDocumentApi->search: %s\n" % e)

We've included the Layar ID, Document Title, URL, and a column for the answers of each question_key in that batch.
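To see what those writer settings produce without touching the API or your disk, here is a small sketch that writes a header row and one data row into an in-memory buffer. The question keys and the data row are sample values taken from this tutorial; io.StringIO simply stands in for the real resultsReport.csv file.

```python
import csv
import io

question_keys = ["Biomarkers", "Study Drug"]  # sample question keys

buffer = io.StringIO()  # in-memory stand-in for the CSV file
wr = csv.writer(buffer, quoting=csv.QUOTE_ALL, delimiter=";")

# Header row: three fixed document columns plus one column per question key
wr.writerow(["Layar ID", "Document Title", "Layar URL"] + question_keys)

# One data row; a question key with no answers becomes an empty cell
wr.writerow([
    "trials_NCT02753283",
    "Sustaining Skeletal Health in Frail Elderly",
    "https://clinicaltrials.gov/ct2/show/study/NCT02753283",
    "",
    "raloxifene (0.98), placebo (0.68)",
])

csv_text = buffer.getvalue()
print(csv_text)
```

Because each answer cell is itself a comma-separated list, quoting every field and using a semicolon as the delimiter keeps the commas inside a cell from being mistaken for column breaks.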

Want Other Parameters?

If you're interested in additional source document parameters, there are many you can pull into this report! Please reference this section to remember how to access those source document details, and add any additional headers for those parameters.

Write Results to CSV

Almost done! All you need is to write the row_details results to the CSV. We print the row_details to the console just before (this is optional). To write the same response to a CSV, add the additional wr.writerow(row_details) command.

def answerReport():
    ## Section 1: Set Up
    # Instantiate APIs
    # Indicate the data sources where documents are coming from
    # Input your batch key to retrieve information from

    try:
        with open('resultsReport.csv', 'w', newline='') as myfile:
            wr = csv.writer(myfile, quoting=csv.QUOTE_ALL, delimiter=';')

            ## Section 2: Retrieve Results
            # Get the Question Keys for a bulk question batch

            # Write the header row for the spreadsheet
            wr.writerow(['Layar ID', 'Document Title', 'Layar URL'] + question_keys)

            # Define batch result parameters
            # Build and execute the query for the documents in the saved list
                # Loop over the docs, writing the answers as we go
                    # Go get all answers for this document + batch
                    # Get the answers per question key
                        # Get the top 3 answers
                    # Print Results

                    # Write the content to the CSV
                    wr.writerow(row_details)
                # Start Next Document (Loop)
    except ApiException as e:
        print("Exception when calling SourceDocumentApi->search: %s\n" % e)

Final Script

Altogether, here's the final script (with the CSV blocks added in):

# add dependencies
import layar_api
from layar_api.rest import ApiException
import requests
from pprint import pprint
import time
import csv

# set up your authentication credentials
base_host = 'BASE_URL' # your Layar instance (e.g. 'demo.vyasa.com')
client_id = 'AbcDEfghI3' # example developer API key
client_secret = '1ab23c4De6fGh7Ijkl8mNoPq9' # example developer API secret

# configure oauth access token for authorization
configuration = layar_api.Configuration()
configuration.host = f"https://{base_host}"
configuration.access_token = configuration.fetch_access_token(
    client_id, client_secret)

# Make your life easier for the next task: instantiating APIs!
client = layar_api.ApiClient(configuration)


def answerReport():
    # Instantiate APIs
    sourceDocApi = layar_api.SourceDocumentApi(client)
    questionApi = layar_api.QuestionApi(client)
    answerApi = layar_api.AnswerApi(client)

    # Indicate the data sources where documents are coming from
    data_sources = f"{base_host}, master-clinicaltrials.vyasa.com"

    # Input your batch key to retrieve information from
    batch_key = "Demo Batch"

    try:
        with open('resultsReport.csv', 'w', newline='') as myfile:
            wr = csv.writer(myfile, quoting=csv.QUOTE_ALL, delimiter=';')

            # Get the Question Keys for this bulk question batch
            body = layar_api.QuestionSearchCommand(
                batch_grouping_key=batch_key,
                rows=50 # Input how many question keys you want to pull (optional)
            )
            try:
                question_count_response = questionApi.get_question_field_counts(body, 'questionKey')
                question_keys = list(map(lambda q: q.key, question_count_response))
                for key in question_keys:
                    print(f"question key: {key}")
            except ApiException as e:
                print("Exception when calling QuestionApi->get_question_field_counts: %s\n" % e)
                return # Without question keys there is nothing to report

            # Write the header row for the spreadsheet
            wr.writerow(['Layar ID', 'Document Title', 'Layar URL'] + question_keys)

            # Define batch result parameters
            offset = 0 # What document of the batch you want to start on (if the first document of the batch, offset = 0) -> important for pagination
            batch_size = 50 # This is not exact. API will return up to this number, **per host**

            while True:
                # Build and execute the query for the documents in the saved list
                body = layar_api.SourceDocumentSearchCommand(
                    saved_list_ids=['AYAJ2QNF9inKGTZtewDd'],
                    start=offset,
                    rows=batch_size,
                    sort='datePublished',
                    sort_order='desc',
                    source_fields=['name', 'id', 'documentURI'])
                x_vyasa_data_providers = data_sources
                documents = sourceDocApi.search_documents(body, x_vyasa_data_providers)

                # Loop over the docs, writing the answers as we go
                for document in documents:
                    row_details = [
                        document.id,
                        document.name,
                        document.document_uri
                    ]

                    # Go get all answers for this document + batch
                    body = layar_api.AnswerSearchCommand(
                        batch_grouping_key=batch_key,
                        single_doc_question_document_id=document.id,
                        with_concepts=False,
                        rows=200
                    )
                    all_answers_for_doc = answerApi.search_answer(body=body)
                    total_answers = int(answerApi.api_client.last_response.getheader('x-total-count'))
                    if total_answers > 200:
                        print(f"Unexpectedly found a lot of answers to {len(question_keys)} questions for doc {document.id}")

                    # Get the answers per question key
                    for key in question_keys:
                        answers_for_q_key = [a for a in all_answers_for_doc if a.question_key == key]
                        if answers_for_q_key:
                            # Get the top 3 answers
                            answers_for_q_key.sort(reverse=True, key=lambda a: max(a.evidence, key=lambda e: e.probability).probability)
                            answers_for_q_key = answers_for_q_key[:3]
                        else:
                            answers_for_q_key = []
                        row_details.append(', '.join(map(lambda a: f"{a.text} ({a.evidence[0].probability})", answers_for_q_key[:3])))

                    print(row_details)

                    # Write the content to the CSV
                    wr.writerow(row_details)

                offset = offset + batch_size
                if len(documents) == 0:
                    break
    except ApiException as e:
        print("Exception when calling SourceDocumentApi->search_documents: %s\n" % e)


# Run the report
answerReport()

Success!

You've gathered your batch, its question keys, and the answers for each document in each question key, and written all of the results to a CSV file. Once you've run this script, you should be able to find your results in a CSV named "resultsReport.csv"!


Next up, we'll learn how to take the answers from a Curate batch and use them to submit a new training run with the Vyasa deep learning model training pipeline!